Building a renderer of my own, experimenting with various CG techniques and API tricks, has been a long-standing goal for me, yet it has always somehow remained unattainable. Every time, I had to put my work on hold for one reason or another, and afterwards the old code looked like outright garbage, and the only impulse was to rewrite everything again and again. This post is the first in (hopefully) a series of blog entries documenting yet another attempt of mine. This time, however, I will try to write things down after every milestone and reflect on the work I have done. This time I will try to make something that finally goes beyond my previous feeble attempts.

Core design ideas

At the time of writing, everything I write about is already implemented, which gives me the opportunity to see it as one whole block of work and to formulate the ideas that drove me to do things this way and not another. At this point, the core principles and ideas for the project seem to have taken shape, so here we go:

- Vulkan-first-and-only. No abstraction layers to accommodate other APIs, not even trying to support OpenGL or D3D11 - by the time this work finds any application other than experimenting, the relevance of these APIs is likely to diminish. For my purposes, Vulkan offers enough portability (while I do work on Windows, Vulkan allows switching to Linux or macOS while keeping much of the codebase) and flexibility (lots of low-level core features + a massive number of extensions) to cover it all. I have also decided to use the Vulkan 1.3 feature set (whenever I want to), because for a hobby project I see little sense in explicitly trying to support the entire range of platforms out there;

- Explicit reliance on the Vulkan SDK. As of late, the SDK comes with a healthy set of pre-built libraries that allow developing applications with little to no other dependencies. That includes: the Vulkan API itself, SDL2 for window creation and input processing, GLM for math, shaderc or DXC for shader compilation, SPIRV-Reflect for reflection data (actually, I do not use this one yet, but it is very likely that I will);

- (Simplified) C++ as the language of choice. While I applaud Vulkan for being C API first, I have come to understand, after many prior attempts, that the explicit nature of the C API will greatly slow me down and reduce motivation if not augmented with some C++ convenience constructs. For that purpose I even gave Vulkan-Hpp (also bundled with the SDK) a try, but compile times grew uncomfortable really fast with it, and I had no real desire to spend much time setting it up in a more or less optimal way. Also, I am not too crazy about most modern C++ features, meaning that I personally don't see them as bringing much value to the table. So, for this project I decided to use the Vulkan C API, but with my own convenience wrappers for somewhat faster development;

- Greatly simplified error handling. Simplified to the point that, when something fails, the application simply crashes or breaks into the debugger - optionally, with some message to standard output (handy for shader compilation). Vulkan validation layers are not explicitly listed in the code - instead, Vulkan Configurator is used to inject the necessary validation layers when testing;

- Lua scripts for setting up the scene. I have done much work embedding Lua in the past and was constantly amazed by how simple yet powerful a tool it can be. That settles it: instead of loading assets from established formats, I will initially create scenes from Lua scripts, offering basic functionality like placement of simple primitives (quads, cubes, spheres etc.) and specifying projection and light parameters;

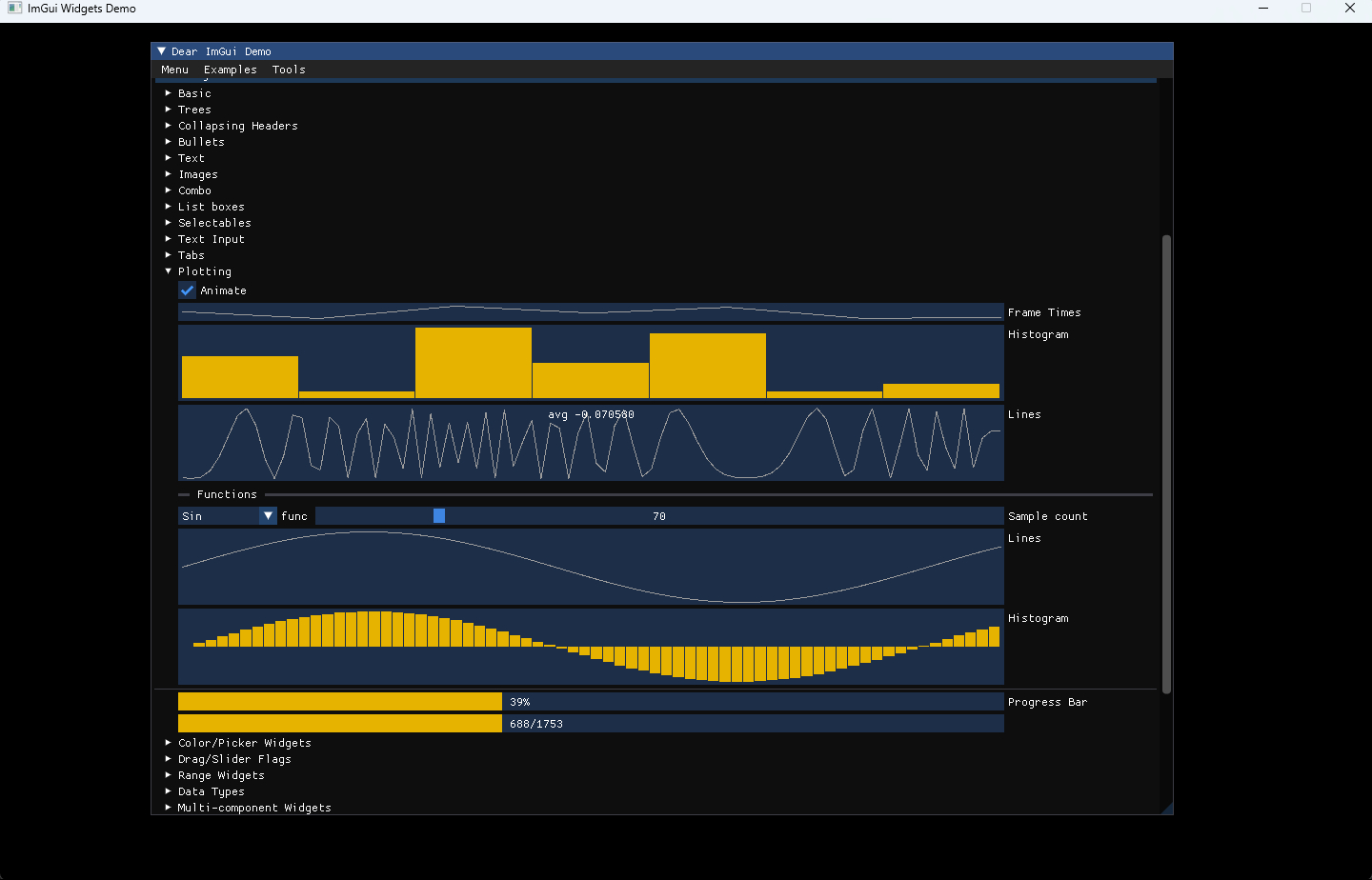

- Dear ImGui for UI. Again, a tool I have been using for some years now and have been happy with. Bonus point: an example of integration into an SDL2 application is available in the library repository (the rendering part will still be my own).
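The "crash or break into the debugger" approach from the error-handling item above could look roughly like this. It is only a minimal sketch, not the code from the repository: the names `APP_CHECK`, `APP_BREAK` and `formatFailure` are hypothetical, and the choice of compiler intrinsics is an assumption.

```cpp
#include <cstdio>
#include <cstdlib>
#include <string>

// Pick a way to stop execution. The intrinsics halt under a debugger;
// without one attached the process simply terminates.
#if defined(_MSC_VER)
#include <intrin.h>
#define APP_BREAK() __debugbreak()
#elif defined(__GNUC__) || defined(__clang__)
#define APP_BREAK() __builtin_trap()
#else
#define APP_BREAK() std::abort()
#endif

// Hypothetical helper: builds the optional message for standard output.
inline std::string formatFailure(const char* expr, const char* msg)
{
    std::string text = "Check failed: ";
    text += expr;
    if (msg && *msg) {
        text += " (";
        text += msg;
        text += ")";
    }
    return text;
}

// No error codes, no recovery: report the failed expression and stop.
#define APP_CHECK(cond, msg)                                    \
    do {                                                        \
        if (!(cond)) {                                          \
            std::puts(formatFailure(#cond, (msg)).c_str());     \
            std::fflush(stdout);                                \
            APP_BREAK();                                        \
        }                                                       \
    } while (0)
```

A call site would then be a single line, for example `APP_CHECK(result == VK_SUCCESS, "vkCreateDevice")`, with the message argument carrying things like a shader compiler log.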

Implementing the points above generated a significant amount of boilerplate code, which I tried to push into reusable classes and structs, with varying degrees of success. I am not going to write down all the details, but I feel compelled to list at least some conceptual descriptions of these:

- APIState and APIClient are a means of re-using Vulkan objects that are required for virtually anything: allocation callbacks, the physical and (especially) the logical device. Classes inherited from APIClient are constructed with a reference to APIState, which allows them to use the shared state;

- Context is a wrapper around a command queue, a command pool that allocates for this queue, and a collection of command buffers allocated from the pool. Flipping the context means switching between the command buffers used for recording;

- PipelineBarrier is what it says - an execution barrier wrapper that can also manage buffer and image memory barriers;

- Buffer and Image are wrapper classes around the respective resources. Both use plain vkAllocateMemory to allocate their own storage (no VMA for now, a hobby project is unlikely to go up to 4096 unique allocations anyway, maybe later...);

- BindingTableLayout and BindingTable simplify working with descriptor sets (to a degree). BindingTable (a wrapper around a descriptor set) uses heap allocation to acquire memory for descriptor writes, accumulates them and submits them;

- ShaderCompiler is a wrapper around the compiler library (shaderc at the time of writing) that generates VkShaderModule objects;

- Window wraps an SDL2 window and input processing, and also controls the Vulkan swap chain lifecycle (create/present/destroy);

- Application is the centerpiece of my framework, meant to be used by derived projects to set up their basic infrastructure;

- Multiple small wrappers around Vulkan C API structs, doing nothing but setting the sType, pNext and flags fields to their default values (especially structure types, these are irritatingly easy to get wrong).

ImGui renderer

While window system integration and input processing are a rip-off of the example provided in the repository, the rendering itself was implemented from scratch. Here and further on, I do not plan to describe the implementation in full detail, just some bullet points about the stuff I find significant:

- While dynamic rendering is used internally to set up the graphics command environment, there is an option to skip that and rely on it being set up externally. No layout transitions are performed though - this has to be done outside of the ImGui renderer;

- Vertex and fragment shaders are compiled externally and embedded into the code as binary data arrays (this comes from the fact that I had not properly implemented the ShaderCompiler wrapper class before working on the ImGui renderer, as I was in too much of a hurry to get something onto the display);

- A single descriptor set is allocated with a single descriptor binding in it - a combined image sampler for the ImGui font texture atlas. It is written once upon initialization and used from then on;

- Projection matrix for the vertex shader is passed via push constants (64 bytes total, quite a lot for push constants). In retrospect: no need for that, ImGui seems to rely only on scale and translation, so passing two vec2's would suffice (16 bytes, much better), so this

    layout(push_constant) uniform Params { mat4 Transform; };
    ...
    gl_Position = Transform * vec4(InPos, 0.0, 1.0);

would transform into this:

    layout(push_constant) uniform Params { vec2 Scale, Translation; };
    ...
    gl_Position = vec4((InPos * Scale) + Translation, 0.0, 1.0);

- Memory size for vertex/index buffers is static, unlike my previous implementations. It can be configured when initializing the renderer, with a 1MB minimum size. Done this way just to reduce initial complexity a little bit.

Now, if we generate some widgets using the built-in ImGui::ShowDemoWindow(), the following gets rendered:
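As a side note on the push-constant item in the list above: the CPU side of the scale/translation variant could be sketched as below. This is not the repository code. `Vec2`, `ImGuiPushConstants` and both functions are hypothetical names, and the two inputs are assumed to come from the `DisplayPos` and `DisplaySize` members of `ImDrawData`.

```cpp
// Plain stand-in so the sketch compiles without ImGui or GLM headers.
struct Vec2 { float x, y; };

// Mirrors the GLSL push-constant block: two vec2's, 16 bytes in total.
struct ImGuiPushConstants
{
    Vec2 scale;
    Vec2 translation;
};
static_assert(sizeof(ImGuiPushConstants) == 16, "must match the shader block");

// Maps the rectangle [displayPos, displayPos + displaySize] to [-1, +1]
// on both axes. No Y flip is needed here: ImGui's origin is the top-left
// corner and Vulkan's clip-space Y points down as well.
inline ImGuiPushConstants makeImGuiPushConstants(Vec2 displayPos, Vec2 displaySize)
{
    ImGuiPushConstants pc;
    pc.scale.x = 2.0f / displaySize.x;
    pc.scale.y = 2.0f / displaySize.y;
    pc.translation.x = -1.0f - displayPos.x * pc.scale.x;
    pc.translation.y = -1.0f - displayPos.y * pc.scale.y;
    return pc;
}

// Same math as the shader line: (InPos * Scale) + Translation.
inline Vec2 toClipSpace(const ImGuiPushConstants& pc, Vec2 p)
{
    return { p.x * pc.scale.x + pc.translation.x,
             p.y * pc.scale.y + pc.translation.y };
}
```

The resulting 16-byte struct is what would be handed to `vkCmdPushConstants`; `toClipSpace` exists only to check the numbers on the CPU.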

First scripted scene

The scene description "language" I have implemented is based on the one suggested by an online course I took a while ago. The workflow mimics the approach of OpenGL 1.x: we call push/popTransform() to move between levels of transform nesting, and use functions named translate(), rotate() and scale() to apply transformations to the matrix currently on top of the stack. The function quad_mesh() creates a quad in the scene using the transform on top of the stack. Projection and view matrices are defined using perspective().
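The matrix stack behind such script functions can be sketched in a few lines of C++. This is an illustration of the OpenGL 1.x-style idea only, not the project's implementation: `Mat4`, `TransformStack` and the helper functions are hypothetical, rotation is left out for brevity, and column-major storage is an assumption.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Column-major 4x4 matrix: element (row r, column c) lives at m[c * 4 + r].
using Mat4 = std::array<float, 16>;

inline Mat4 identity()
{
    Mat4 m{};
    m[0] = m[5] = m[10] = m[15] = 1.0f;
    return m;
}

// Returns a * b.
inline Mat4 multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r{};
    for (int c = 0; c < 4; ++c)
        for (int row = 0; row < 4; ++row)
            for (int k = 0; k < 4; ++k)
                r[c * 4 + row] += a[k * 4 + row] * b[c * 4 + k];
    return r;
}

class TransformStack
{
public:
    TransformStack() { stack_.push_back(identity()); }

    // Entering a nesting level duplicates the current top...
    void push() { stack_.push_back(stack_.back()); }
    // ...and leaving it restores the parent transform.
    void pop() { assert(stack_.size() > 1); stack_.pop_back(); }

    // Like glTranslate/glScale, these post-multiply the top of the stack.
    void translate(float x, float y, float z)
    {
        Mat4 t = identity();
        t[12] = x; t[13] = y; t[14] = z;
        stack_.back() = multiply(stack_.back(), t);
    }

    void scale(float x, float y, float z)
    {
        Mat4 s = identity();
        s[0] = x; s[5] = y; s[10] = z;
        stack_.back() = multiply(stack_.back(), s);
    }

    // The matrix a call like quad_mesh() would capture for the new object.
    const Mat4& top() const { return stack_.back(); }

private:
    std::vector<Mat4> stack_;
};
```

Each script function would then map onto one method call, with the mesh-creating functions reading `top()` at the moment they are invoked.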

To get the script to work, a variable called __main__ must be created, containing an array of three functions. The first one is called when the scene is initialized, the second on every update tick, and the third when shutting down. Any of them can be set to nil if it is not needed.

    -- First Quad
    function startup()
        quad_mesh()
        perspective({0, 0, 4}, { 0, 0, 0 }, { 0, 1, 0 }, 60)
    end

    __main__ = {
        startup,
        nil,
        nil
    }

Feeding this into a renderer produces the result:

One thing to remember: some math libraries, like GLM, generate a right-handed perspective matrix assuming the Y axis points up, while in Vulkan's clip space it points down. Thus we need to multiply the elements of the second row (not column!!!) by -1. For a perspective projection this row has only one non-zero element, which is at (1,1).
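To make the flip concrete, here is a small self-contained sketch. The perspective function is a hand-written stand-in (right-handed, Y up, depth mapped to 0..1, which is an assumption about the setup), so that nothing depends on GLM; `Mat4x4`, `perspectiveRH`, `flipYForVulkan` and `ndcY` are hypothetical names.

```cpp
#include <array>
#include <cmath>

// Column-major storage, indexed as m[column][row] (the convention GLM uses).
using Mat4x4 = std::array<std::array<float, 4>, 4>;

// Stand-in for a right-handed perspective matrix with Y pointing up.
inline Mat4x4 perspectiveRH(float fovyRadians, float aspect, float zNear, float zFar)
{
    const float f = 1.0f / std::tan(fovyRadians * 0.5f);
    Mat4x4 m{}; // all zeros
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = zFar / (zNear - zFar);
    m[2][3] = -1.0f;
    m[3][2] = -(zFar * zNear) / (zFar - zNear);
    return m;
}

// Negate the second row. For a perspective matrix only (1,1) is non-zero,
// so flipping that single element would do; the loop covers the general case.
inline void flipYForVulkan(Mat4x4& m)
{
    for (int col = 0; col < 4; ++col)
        m[col][1] = -m[col][1];
}

// NDC y of a view-space point after projection and perspective divide.
inline float ndcY(const Mat4x4& m, float x, float y, float z)
{
    const float clipY = m[0][1] * x + m[1][1] * y + m[2][1] * z + m[3][1];
    const float clipW = m[0][3] * x + m[1][3] * y + m[2][3] * z + m[3][3];
    return clipY / clipW;
}
```

A point above the view axis ends up with positive NDC y before the flip (the lower half of the screen in Vulkan) and negative NDC y after it. With GLM itself, the equivalent is a one-liner such as `proj[1][1] *= -1.0f;` applied to the matrix returned by `glm::perspective`.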

Bindless data feed

The only implementation highlight I want to describe at this point is the bindless approach to uniform data, which eliminates the need for descriptor sets completely for now. So if we have two structs, PerFrameData and PerObjectData (containing the respective uniform values), then in GLSL we declare them as follows:

    layout(buffer_reference, std430) buffer PerFrameData
    {
        ... data members ...
    };

    layout(buffer_reference, std430) buffer PerObjectData
    {
        ... data members ...
    };

    layout(push_constant) uniform Params
    {
        PerFrameData frm;
        PerObjectData obj;
    };

A buffer reference is represented by a VkDeviceAddress value in the API. This value is (typically) 8 bytes long and can be obtained using the vkGetBufferDeviceAddress() function (core in version 1.2, otherwise provided by VK_KHR_buffer_device_address). Pointer arithmetic can be applied to VkDeviceAddress as well, which we use to calculate the data position for each draw call and pass it via push constants (doing it per draw call is not the most efficient way, but it is faster to get working).
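The address arithmetic described above might look like the following on the CPU side. Treat it as a sketch under assumptions rather than the project's code: the buffer layout (per-frame data first, then one per-object record after another), the function names, and the 16-byte alignment are all mine. As far as I know, 16 bytes is the default alignment GLSL assumes for buffer_reference blocks, but that should be checked against the shader declarations.

```cpp
#include <cstddef>
#include <cstdint>

// VkDeviceAddress is a 64-bit unsigned integer in the Vulkan headers; a local
// alias keeps this sketch free of the Vulkan dependency.
using DeviceAddress = std::uint64_t;

// CPU-side mirror of the GLSL push-constant block: two buffer references.
struct Params
{
    DeviceAddress frm; // points at PerFrameData
    DeviceAddress obj; // points at this draw call's PerObjectData
};
static_assert(sizeof(Params) == 16, "two 8-byte addresses");

// Rounds size up to a multiple of alignment (alignment must be a power of two).
constexpr std::uint64_t alignUp(std::uint64_t size, std::uint64_t alignment)
{
    return (size + alignment - 1) & ~(alignment - 1);
}

// Assumed layout inside one buffer:
// [PerFrameData][PerObjectData 0][PerObjectData 1]...
inline Params paramsForDraw(DeviceAddress bufferBase,
                            std::uint64_t perFrameSize,
                            std::uint64_t perObjectSize,
                            std::uint32_t objectIndex,
                            std::uint64_t alignment = 16)
{
    const std::uint64_t objectsOffset = alignUp(perFrameSize, alignment);
    const std::uint64_t objectStride = alignUp(perObjectSize, alignment);

    Params p;
    p.frm = bufferBase;
    p.obj = bufferBase + objectsOffset + objectStride * objectIndex;
    return p;
}
```

In a real frame, `bufferBase` would be the value returned by `vkGetBufferDeviceAddress()` for a buffer created with the shader-device-address usage flag, and the resulting `Params` would be passed to `vkCmdPushConstants` before each draw.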

So far so good...

This and (hopefully) every subsequent chapter will have a link to a repository tag that contains everything implemented so far. This chapter is available here: https://github.com/eugen2891/vulkan-apps/tree/chapter01.

I read through this article after writing the bulk of it, and it struck me how much work it has been with relatively little to show for it. What matters now is to keep working on it regardless - otherwise it will be yet another half-baked thing thrown out of the window. For example, my first idea was to create a variation of the Cornell box as a default test scene - but, as I quickly found out, I had completely forgotten about depth testing, which made the result look, well, strange... But this is material for another chapter already, which should be coming soon. Sic Parvis Magna!